Market Overview

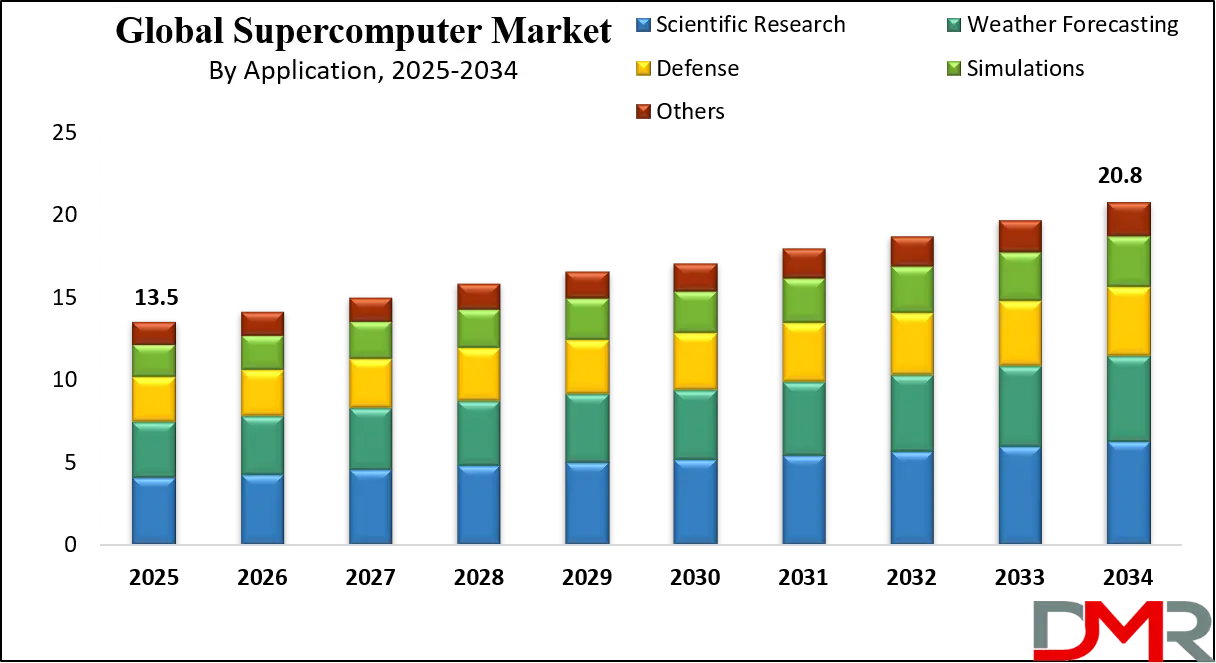

The global supercomputer market is expected to reach USD 13.5 billion in 2025 and USD 20.8 billion by 2034, growing at a CAGR of 4.9%.
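The headline figures can be cross-checked with the standard compound annual growth rate formula. The following is a minimal sketch, not the report's own methodology; the function names are illustrative, and the nine-year horizon (2025 to 2034, the forecast period listed in the report details) is an assumption about how the growth was compounded.

```python
def project_value(base_value: float, cagr: float, periods: int) -> float:
    """Compound base_value forward by `periods` years at annual rate `cagr`."""
    return base_value * (1.0 + cagr) ** periods


def implied_cagr(start_value: float, end_value: float, periods: int) -> float:
    """Annual growth rate that takes start_value to end_value over `periods` years."""
    return (end_value / start_value) ** (1.0 / periods) - 1.0


# Market figures quoted in the report (USD billion).
# ASSUMPTION: nine compounding periods, i.e. 2025 to 2034.
projected = project_value(13.5, 0.049, 9)
rate = implied_cagr(13.5, 20.8, 9)

print(f"Projected value: USD {projected:.1f} billion")
print(f"Implied CAGR: {rate:.1%}")
```

Under that assumption the projection and the quoted end value agree to one decimal place; a ten-year horizon would give a noticeably higher end value at the same rate.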


The global supercomputer market is crucial for driving innovation across a broad spectrum of industries, including scientific research, healthcare, weather forecasting, and financial modeling. Supercomputers, with their superior computational power and speed, can process vast datasets and run complex simulations that ordinary computing systems cannot handle. The market's main growth drivers are rising adoption of high-performance computing (HPC), increasing demand for solving intricate problems, and the proliferation of artificial intelligence (AI) and machine learning (ML) technologies.

Governments and companies around the globe are investing significantly in advanced supercomputing infrastructure, taking advantage of technologies such as quantum computing, high-speed interconnects, and energy-efficient processors. China and Japan are the dominant players in the Asia-Pacific region, while North America and Europe continue to hold considerable influence. Challenges such as high development and maintenance costs and the need for skilled personnel persist, but cloud-based supercomputing solutions and closer collaboration between academia and industry are expected to support significant market growth.

The supercomputer market in 2025 combines rapid innovation with healthy competition, and major improvements are already redefining the landscape. As AI and big data applications grow at a tremendous rate, demand is rising for supercomputers in drug discovery, climate modeling, and the development of autonomous vehicles.

IBM, HPE, and NVIDIA are prominent players using cutting-edge technologies such as GPUs and custom architectures. Government-backed initiatives, such as exascale computing projects in the U.S. and China, are pushing the boundaries of computational power. The market is also shifting towards energy-efficient designs to address sustainability concerns, since these systems often consume vast amounts of energy.

Cloud-based HPC services are gaining traction, making supercomputing accessible to smaller organizations. Despite these advancements, challenges remain, including supply chain disruptions for critical components like semiconductors and the high costs associated with developing and deploying supercomputers.

Global leaders, particularly the US and China, are increasingly competing for technological superiority. Overall, the supercomputer market remains vibrant, characterized by high levels of investment, rapid technological evolution, and a dual focus on computational and environmental challenges.

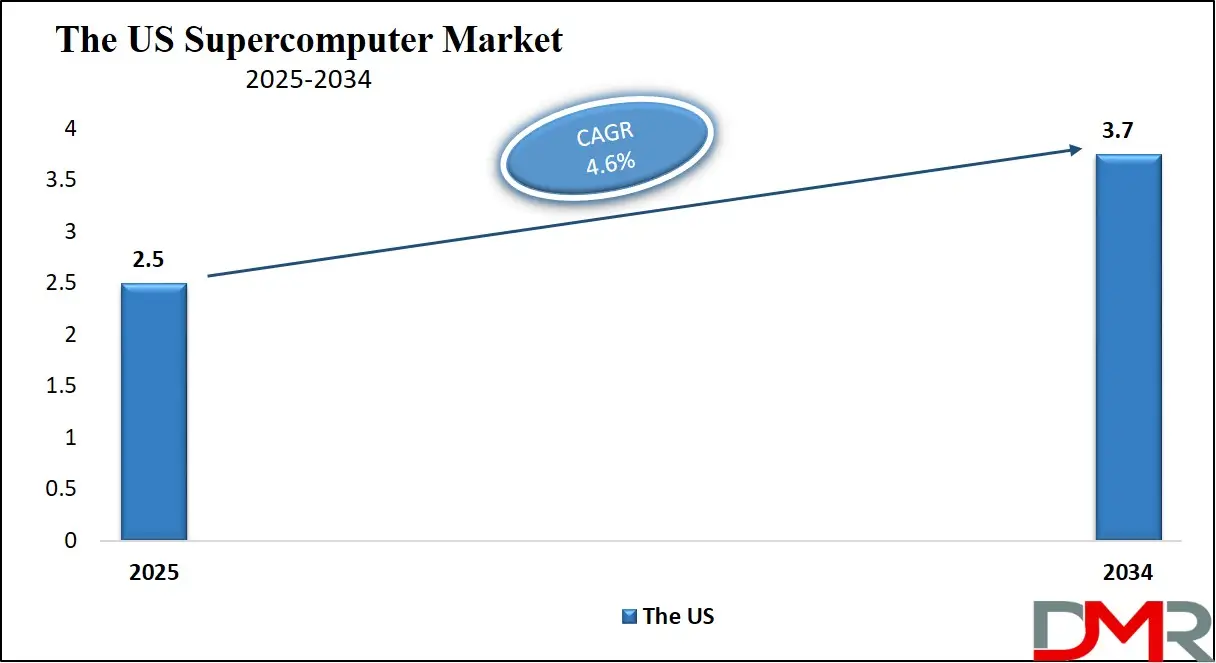

The US Supercomputer Market

The US supercomputer market is projected to be valued at USD 2.5 billion in 2025 and is expected to reach USD 3.7 billion by 2034 at a CAGR of 4.6%.

The US continues to be an industry leader thanks to significant high-performance computing expenditure on scientific research, defense, and industrial applications. Leading US companies such as NVIDIA, IBM, and Intel have given supercomputing strong impetus by advancing the technology and keeping the country at the forefront. A well-established R&D infrastructure supports the market, but strong competition from China and a shortage of HPC professionals raise significant concerns.

Supercomputers like Frontier and Aurora have set benchmarks in computational speed and energy efficiency and are driving innovation in AI, machine learning, and data analytics. The Department of Energy continues to fund initiatives that contribute to national security, climate research, and healthcare. With providers like AWS and Google Cloud at the helm, cloud-based supercomputing services are broadening access to HPC in pharmaceuticals, automotive design, and other industries. Alongside government efforts, private enterprises and academic institutions are major contributors to the growth of the HPC ecosystem, making the US market strong and highly sustainable.

Key Takeaways

- Market Value: The global supercomputer market is expected to reach USD 20.8 billion by 2034 from a base value of USD 13.5 billion in 2025, at a CAGR of 4.9%.

- By Operating System Type: Linux is projected to maintain its dominance in the operating system type segment, capturing 70.0% of the market share in 2025.

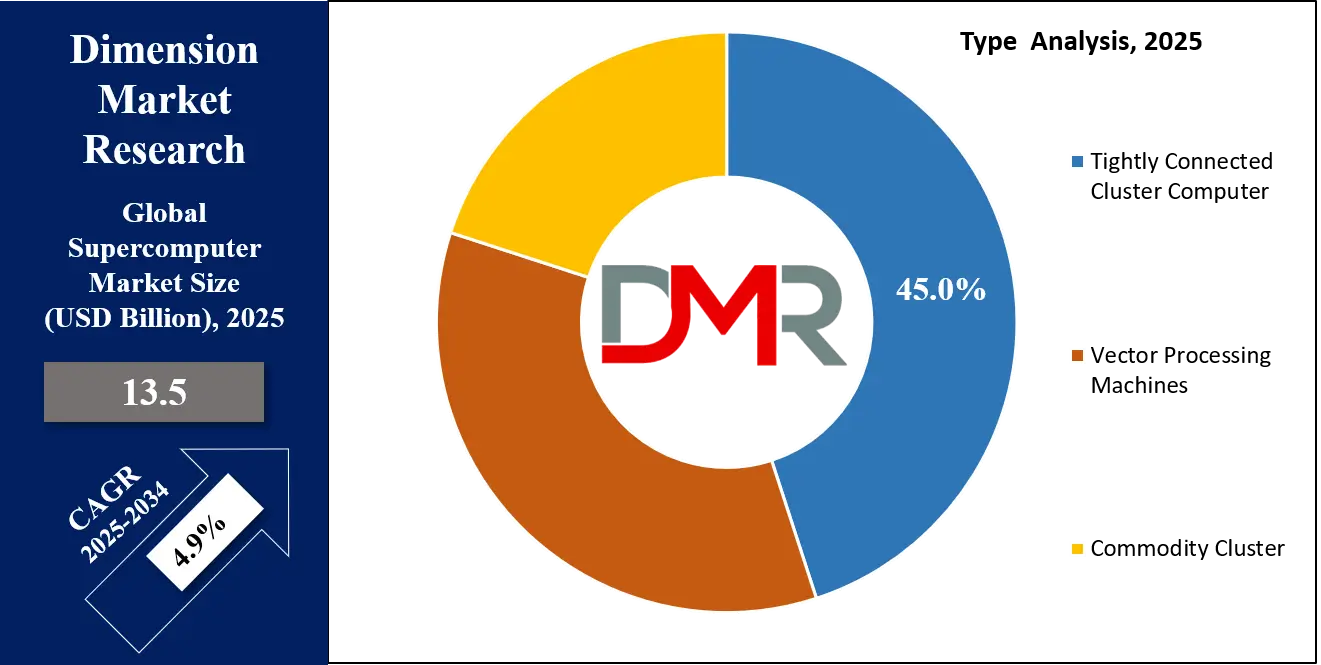

- By Type: Tightly connected cluster computers are projected to dominate the type segment in the global supercomputer market, holding 45.0% of the market share in 2025.

- By Application: Scientific research is expected to lead the global supercomputer landscape in 2025 with 30.0% of total global market revenue.

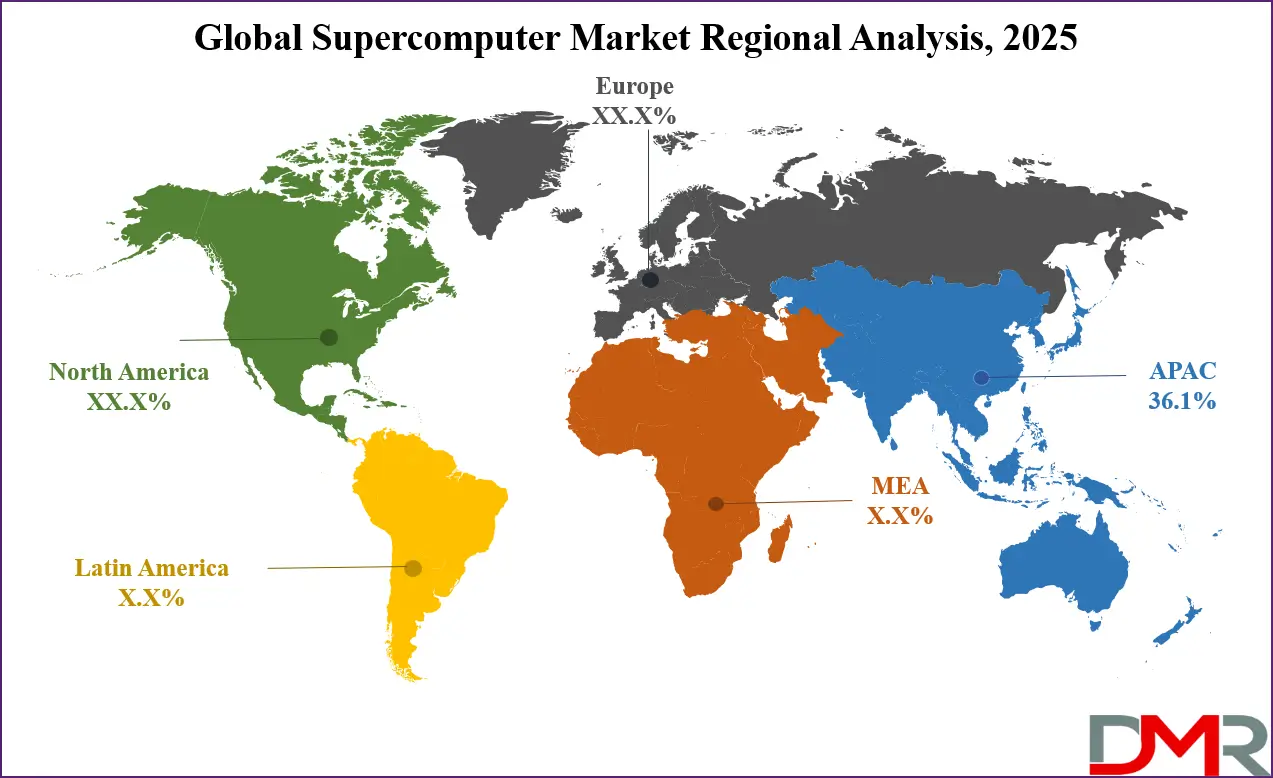

- By Region: Asia Pacific is expected to lead the global supercomputer market with 36.1% of the market share in 2025.

- Key Players: Major players in the global supercomputer market include Atos SE, Cray, Dell Technologies, Fujitsu, and other key players.
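The shares in the takeaways can be turned into rough 2025 dollar values by multiplying each one by the USD 13.5 billion market size. This is only an illustrative sketch: it assumes every percentage is a share of that same global total, and the dictionary keys and function name are made up for the example.

```python
MARKET_SIZE_2025_USD_BN = 13.5  # global market size quoted in the report

# 2025 segment shares quoted in the takeaways, expressed as fractions.
# ASSUMPTION: each share applies to the same USD 13.5 billion total.
SHARES_2025 = {
    "Linux (operating system)": 0.700,
    "Tightly connected cluster computer (type)": 0.450,
    "Scientific research (application)": 0.300,
    "Asia Pacific (region)": 0.361,
}


def implied_segment_values(total: float, shares: dict[str, float]) -> dict[str, float]:
    """Multiply the market total by each segment's share."""
    return {name: total * share for name, share in shares.items()}


values = implied_segment_values(MARKET_SIZE_2025_USD_BN, SHARES_2025)
for name, value in values.items():
    print(f"{name}: about USD {value:.2f} billion")
```

The segments come from different breakdowns of the same market, so the resulting values overlap and should not be summed.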

Use Cases

- Scientific Research and Climate Modeling: Supercomputers are used to understand complicated scientific phenomena and simulate large-scale processes that are impossible to replicate. In climate science, supercomputers process terabytes of atmospheric, oceanic, and land-use data to create predictive models for climate change, hurricanes, and natural disasters.

- Healthcare and Drug Discovery: The healthcare industry uses supercomputing power to accelerate drug discovery, genome sequencing, and personalized medicine. Molecular simulations run on supercomputers help identify potential drug candidates, significantly reducing the time and cost of traditional drug development. Supercomputers also support precision medicine by analyzing patient-specific genetic data, enabling targeted therapies and advancing cancer treatment.

- Artificial Intelligence and Machine Learning Applications: Supercomputers train AI and large-scale machine learning models, enabling breakthroughs in areas such as self-driving cars, natural language processing, and robotics. In finance and retail, supercomputers are used for predictive analytics, fraud detection, and supply chain optimization.

- National Defense and Space Exploration: Governments worldwide use supercomputers for national security, defense simulation, and space exploration. Supercomputers simulate nuclear tests to check the reliability and safety of weapons without physical detonation, which facilitates disarmament efforts. In space exploration, agencies such as NASA use supercomputers to model spacecraft designs, simulate planetary environments, and plan space missions.

Stats & Facts

- Performance Measurement: Supercomputer performance is measured in FLOPS (floating-point operations per second). The most powerful supercomputers today can achieve performance levels in exaFLOPS (1 exaFLOPS = 10^18 FLOPS).

- Current Leaders: As of 2023, the Frontier supercomputer, located at Oak Ridge National Laboratory in the United States, is the most powerful, with a peak performance of approximately 1.5 exaFLOPS. It uses around 29 megawatts of power.

- Cloud-Based Supercomputing Adoption: Cloud supercomputing is gaining immense popularity, with the market for cloud HPC solutions expected to dominate by 2030. Currently, providers like Amazon Web Services (AWS), Google Cloud, and Microsoft Azure offer scalable HPC resources to customers ranging from startups and smaller businesses to research institutions.

- Exascale Computing Projects: The race for exascale computing has become a defining feature of the supercomputer market. As of 2025, the United States leads with systems like Frontier, which achieves 1.194 exaFLOPS. These projects not only showcase technological superiority but also serve critical applications like weather forecasting, nuclear research, and AI model training.

- Top Supercomputer Rankings: The Top500 list, published semiannually, ranks the 500 most powerful supercomputers in the world using the Linpack benchmark. As of now, Frontier (USA), Fugaku (Japan), and LUMI (Finland) are among the top five.
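To make the FLOPS units above concrete, the sketch below converts a raw FLOPS figure into a prefixed value and estimates how long a fixed amount of arithmetic would take at a given sustained rate. The helper names and the 10^21-operation workload are hypothetical, chosen only for illustration; real applications rarely sustain benchmark-level throughput.

```python
# SI prefixes commonly used for FLOPS figures, largest first.
PREFIXES = [
    ("exa", 1e18),
    ("peta", 1e15),
    ("tera", 1e12),
    ("giga", 1e9),
]


def format_flops(flops: float) -> str:
    """Render a raw FLOPS figure with the largest prefix that fits."""
    for name, scale in PREFIXES:
        if flops >= scale:
            return f"{flops / scale:.3f} {name}FLOPS"
    return f"{flops:.0f} FLOPS"


def seconds_for_workload(total_operations: float, sustained_flops: float) -> float:
    """Idealized runtime: operations divided by sustained operations per second."""
    return total_operations / sustained_flops


frontier_flops = 1.194e18  # Frontier figure quoted in the list above
print(format_flops(frontier_flops))

# HYPOTHETICAL workload of 1e21 floating-point operations.
print(f"{seconds_for_workload(1e21, frontier_flops):.0f} seconds")
```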

Market Dynamics

Driving Factors

Rising Demand for High-Performance Computing (HPC)

High-performance computing has become a necessity for industries that need to process huge amounts of data and execute complex simulations. HPC systems in healthcare have changed the way genomic research is done, analyzing terabytes of genetic data to identify mutations causing diseases and tailoring treatments to individual patients. Supercomputers accelerate drug discovery through the simulation of molecular interactions. Researchers can now test thousands of compounds virtually before proceeding to actual physical trials, which saves time and costs. The financial sector also uses HPC for real-time analytics, such as fraud detection, algorithmic trading, and risk assessment.

These systems are essential for rapid decision-making in volatile markets, where profits can be made or lost within microseconds. As industries grapple with the exponential growth of data from IoT devices, sensors, and business operations, reliance on HPC continues to surge. The ability of supercomputers to handle immense computational workloads and derive actionable insights positions them as critical assets in driving innovation and efficiency across the global economy.

Advancements in AI and Machine Learning

Artificial intelligence and machine learning are transforming industries at an unprecedented scale, demanding tremendous supercomputing power to handle their computational intensity. With the proliferation of AI throughout industries, supercomputers are assuming an even greater role. Organizations can innovate and improve operational effectiveness while maintaining their competitiveness in an ever more data-dependent world by expediting the analysis of huge volumes of data through supercomputing.

AI-based predictive analytics transform the retail and manufacturing sectors through better supply chains, demand forecasting, and enhanced customer experiences. The healthcare sector makes use of supercomputer-trained AI-driven models in early disease detection, medical image analysis, and personalized treatment plans. For example, training a generative AI model like ChatGPT involves billions of parameters and trillions of calculations, tasks that are executed efficiently on HPC architectures combining CPUs, GPUs, and custom accelerators.

Restraints

High Costs of Development and Maintenance

Supercomputing is one of the most capital-intensive areas of technological development. Building a single supercomputing system can cost hundreds of millions of dollars, or even exceed a billion dollars, depending on its scale and capabilities. That includes not only hardware such as processors, accelerators, storage systems, and networking equipment but also the infrastructure needed to house and operate these systems.

Operating costs are also very high because supercomputers consume a great deal of electricity. For instance, the Frontier exascale system in the United States consumes about 21 megawatts of power, comparable to the demand of thousands of homes. The cooling systems needed to manage the heat from these machines add further energy costs. Maintenance requires a team of experts to keep the system running smoothly, which adds to operating costs. Together, these costs put supercomputing out of reach for many smaller organizations and research institutions and limit market penetration.
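The scale of the electricity bill can be estimated with simple arithmetic. The sketch below uses the 21 MW figure from the text; the electricity price of USD 70 per MWh and the assumption of continuous full-power operation are hypothetical placeholders, not figures from the report, and cooling overhead is not included.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_mwh(power_mw: float, utilization: float = 1.0) -> float:
    """Energy drawn over one year at a constant power level."""
    return power_mw * HOURS_PER_YEAR * utilization


def annual_electricity_cost_usd(
    power_mw: float, price_usd_per_mwh: float, utilization: float = 1.0
) -> float:
    """Yearly electricity cost for the given draw and unit price."""
    return annual_energy_mwh(power_mw, utilization) * price_usd_per_mwh


energy = annual_energy_mwh(21.0)  # 21 MW as quoted in the text
# HYPOTHETICAL price: USD 70 per MWh, chosen only for illustration.
cost = annual_electricity_cost_usd(21.0, price_usd_per_mwh=70.0)

print(f"Annual energy: {energy:,.0f} MWh")
print(f"Annual cost at the assumed price: USD {cost:,.0f}")
```

Changing `utilization` or the price shows how sensitive the operating budget is to either input.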

Shortage of Skilled Workforce

An acute global shortage of skilled professionals is a serious bottleneck in adopting and using supercomputers. Working on a supercomputing system requires training in HPC programming, architecture, and analytics, which is not widely available. Developing efficient parallel algorithms, optimizing code for specific architectures, and managing the integration of CPUs, GPUs, and accelerators are difficult tasks that demand extensive training and experience.

However, educational institutions and training programs have not kept pace with the increasing demand for HPC specialists. The talent gap is most evident as supercomputing applications expand into emerging fields like artificial intelligence, quantum computing, and big data analytics. Organizations find it hard to recruit people with cross-disciplinary expertise, such as AI model training combined with HPC optimization. Supercomputing technologies are also evolving rapidly, which requires constant skill upgrades and creates a challenge for both the workforce and employers.

Opportunities

Expansion of Cloud-Based Supercomputing

Cloud-based HPC services are revolutionizing the supercomputing market landscape by making powerful computing accessible and affordable. Traditional supercomputing involves huge investments in hardware, infrastructure, and highly skilled personnel, which is not possible for smaller organizations, startups, or academic institutions. Cloud providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud are filling this gap by providing HPC-as-a-Service.

The benefit of these platforms is that users can access powerful computing resources on a pay-as-you-go basis, eliminating capital expenditure and lowering entry barriers. Scalability is one of the key advantages of cloud-based HPC because it allows users to allocate resources dynamically according to project requirements. For example, a startup developing an AI model can use heavy cloud resources during training phases and scale them down during periods of lower activity to optimize costs. Moreover, advances in secure data sharing and remote collaboration through the cloud are further boosting adoption.
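The scaling example above can be sketched as a simple cost comparison between elastic allocation and keeping peak capacity running all month. Every number here (node counts, hours, and the USD 3.00 per node-hour rate) is hypothetical and does not reflect any provider's actual pricing.

```python
from dataclasses import dataclass


@dataclass
class Phase:
    name: str
    nodes: int   # nodes allocated during this phase
    hours: int   # duration of the phase in hours


def elastic_cost(phases: list[Phase], rate_per_node_hour: float) -> float:
    """Pay only for the node-hours each phase actually uses."""
    return sum(p.nodes * p.hours for p in phases) * rate_per_node_hour


def fixed_peak_cost(phases: list[Phase], rate_per_node_hour: float) -> float:
    """Keep the peak node count allocated for the whole period."""
    peak_nodes = max(p.nodes for p in phases)
    total_hours = sum(p.hours for p in phases)
    return peak_nodes * total_hours * rate_per_node_hour


# HYPOTHETICAL 30-day month: a heavy training burst, then low activity.
month = [Phase("training", nodes=64, hours=200), Phase("low activity", nodes=4, hours=520)]
RATE = 3.0  # HYPOTHETICAL price in USD per node-hour

print(f"Elastic allocation: USD {elastic_cost(month, RATE):,.0f}")
print(f"Fixed at peak:      USD {fixed_peak_cost(month, RATE):,.0f}")
```

The gap between the two totals grows with the share of time spent in low-activity phases, which is the cost argument for on-demand HPC.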

Adoption of Energy-Efficient Technologies

As energy costs rise and environmental concerns grow, energy-efficient technologies are emerging as a cornerstone of supercomputing innovation. The adoption of GPUs and hybrid architectures has also enhanced energy efficiency. GPUs are specifically designed for parallel processing, and they can execute computations much faster than traditional CPUs with much less power consumption. Hybrid architectures integrate GPUs, CPUs, and accelerators to optimize resource utilization and reduce energy costs.

Governments and organizations globally are focusing on green supercomputing initiatives to meet sustainability goals. For instance, Fugaku (Japan) and Frontier (USA) have been designed as energy-efficient systems that maximize performance while minimizing environmental impact. This focus on sustainability reduces operating costs and also attracts investment from environmentally conscious enterprises and governments.

Trends

Rise of Exascale Computing

Exascale computing refers to systems capable of performing at least one quintillion, or 10^18, calculations per second. Such systems can tackle problems that demand more accurate climate modeling, advanced materials science simulations, and the training of complex AI models. The United States is leading the charge with its Frontier system, which reached exascale performance in 2022. Its capabilities are now enabling faster, more efficient research in fields such as energy, climate change, and healthcare.

Similarly, China and Europe are working hard to develop their own exascale systems, building on machines like Sunway TaihuLight and on the EuroHPC program. This race reflects not only a shift in national computational capability but also the geopolitical importance of supercomputing. Potential applications of these systems include significantly improving weather forecasting models to mitigate the impact of natural disasters, simulating nuclear reactions for safety purposes, and furthering AI development in national defense.

Integration of AI with HPC

The integration of AI with high-performance computing (HPC) is fundamentally transforming how industries approach technological advancement and problem-solving. Deep learning and machine learning algorithms demand enormous computation to handle massive datasets and complex models, so they are often run on supercomputers that can scale parallel processing as required. This convergence of AI and HPC is enabling breakthroughs across fields such as healthcare, autonomous vehicle development, robotics, and finance.

AI-driven supercomputing is revolutionizing drug discovery and genomics research in healthcare by simulating molecular interactions and predicting treatment responses. This significantly cuts down on the time taken for research and allows the faster development of personalized medicines. The integration of AI and HPC is also improving data analysis capabilities. For instance, in climate modeling, AI-driven simulations on supercomputers can predict weather patterns much more accurately, while in finance, AI algorithms optimize trading strategies and risk management in real time.

Research Scope and Analysis

By Operating System Type

Linux is projected to maintain its dominance in the operating system type segment, capturing 70.0% of the market share in 2025, driven by its adaptability, performance, and widespread adoption across the scientific, industrial, and government sectors. Because Linux is open source, it is highly customizable and flexible, which makes it an ideal environment to tailor to the needs of high-performance computing (HPC). Supercomputers handle tasks such as climate modeling, molecular simulations, and artificial intelligence workloads, and they often need to be customized for each specialized and complex task.

Performance and scalability make Linux a natural fit for large parallel computing architectures. Supercomputers often consist of thousands of interconnected nodes and rely on Linux for its efficient resource management, low-latency networking, and robust support for distributed computing. In addition, the widespread adoption of Linux in high-performance computing creates a positive feedback loop, as researchers and developers focus their efforts on refining Linux-based solutions, further solidifying its market leadership.

Another crucial factor behind the dominance of Linux is cost-effectiveness. As an open-source platform, it is far more economical than proprietary operating systems, making it attractive for organizations investing in supercomputing infrastructure. Moreover, Linux’s reputation for security and reliability makes it a trusted choice for critical applications, such as national defense simulations and pharmaceutical research, where stability and data protection are paramount.

By Type

Tightly connected cluster computers are anticipated to dominate the type segment in the global supercomputer market, holding 45.0% of the market share in 2025. This dominance reflects their efficiency, scalability, and ability to handle the complex computational demands of modern high-performance computing (HPC) applications. In a tightly connected cluster computer, the nodes are highly interconnected and optimized for communication with one another.

Such an architecture ensures minimal latency between nodes and very high transfer rates. These systems suit applications with highly interdependent tasks, such as climate modeling, particle physics simulations, and advanced materials research, in which the result of one computation directly influences the next.
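Why latency matters for interdependent tasks can be illustrated with the common latency-plus-bandwidth cost model, in which sending a message takes a fixed startup latency plus the message size divided by bandwidth. The sketch below is a simplification, and the two interconnect profiles are hypothetical values picked for illustration, not measurements of any real system.

```python
def transfer_time_s(
    message_bytes: float, latency_s: float, bandwidth_bytes_per_s: float
) -> float:
    """Latency-plus-bandwidth model: startup latency plus size / bandwidth."""
    return latency_s + message_bytes / bandwidth_bytes_per_s


def step_communication_time_s(num_messages: int, message_bytes: float, link: dict) -> float:
    """Total time for a simulation step that exchanges messages one after another."""
    return num_messages * transfer_time_s(message_bytes, **link)


# HYPOTHETICAL interconnect profiles, for illustration only.
TIGHT_LINK = {"latency_s": 1e-6, "bandwidth_bytes_per_s": 25e9}
LOOSE_LINK = {"latency_s": 50e-6, "bandwidth_bytes_per_s": 1.25e9}

# A step in which each result feeds the next: 1,000 dependent 8 KiB exchanges.
tight = step_communication_time_s(1000, 8192, TIGHT_LINK)
loose = step_communication_time_s(1000, 8192, LOOSE_LINK)

print(f"Tightly connected: {tight * 1e3:.2f} ms per step")
print(f"Loosely connected: {loose * 1e3:.2f} ms per step")
```

With many small dependent messages, the latency term dominates the total, which is why tightly coupled workloads benefit from low-latency interconnects.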

Scalability is another feature that makes tightly connected cluster computers appealing. The number of nodes can be increased as computational needs grow, without significant reconfiguration. This is very useful in research and industrial environments, where workloads are constantly changing and new challenges demand more computing power.

In addition, versatility contributes to the predominance of tightly connected cluster computers. They are no longer limited to scientific research and are increasingly used in industrial applications such as oil and gas exploration, financial modeling, and artificial intelligence training.

By Application

Scientific research is anticipated to lead the global supercomputer landscape in 2025 with 30.0% of total global market revenue. This leadership underlines the central role supercomputers play in advancing scientific discovery and addressing complex global problems. For scientists and researchers working on problems that require enormous computational power, supercomputers have become an essential tool.

In climatology, supercomputers can simulate detailed models of weather patterns, ocean currents, and global climate systems, revealing critical insights into climate change and its potential impacts. Likewise, astrophysical simulations on supercomputers make it possible to study cosmic phenomena that would otherwise be too complex to analyze.

Governments and international organizations also invest significantly in scientific research, which contributes to the supercomputer market. Most countries see supercomputing as a strategic asset for advancing their scientific and technological capabilities. Publicly funded supercomputing centers provide researchers with access to state-of-the-art resources, fostering groundbreaking discoveries and maintaining global competitiveness. By 2025, growing needs in areas such as artificial intelligence, quantum computing, and medicine tailored to patients' genetic profiles will demand significantly more supercomputing power. The increasing dependence of research across disciplines on HPC is among the drivers of this trend.

The Supercomputer Market Report is segmented on the basis of the following:

By Operating System

- Linux

- Unix

By Type

- Tightly Connected Cluster Computer

- Vector Processing Machines

- Commodity Cluster

By Application

- Scientific Research

- Weather Forecasting

- Defense

- Simulations

- Others

By End User

- Commercial Industries

- Government Entities

- Research Institutions

Regional Analysis

The Asia Pacific region is poised to lead the global supercomputer market with 36.1% of the market share in 2025, reflecting its growing investments in high-performance computing (HPC) infrastructure and technological innovation. This dominance is driven by the region’s emphasis on scientific research, industrial applications, and strategic government initiatives aimed at strengthening technological capabilities. China, Japan, South Korea, and India are among the countries leading the market growth.

China has been at the forefront of the global supercomputing market, with many of its supercomputers ranking among the world's best on the TOP500 list. The Chinese government's massive investment in HPC is part of a larger plan to strengthen the country's position in artificial intelligence, quantum computing, and advanced manufacturing. These supercomputers are used in applications ranging from weather forecasting and aerospace engineering to energy exploration and national security.

The dominance of the Asia Pacific region can also be attributed to rapidly rising demand for supercomputing among the region's industries. Its energy, pharmaceutical, and automotive manufacturing sectors are expanding, creating a strong need for advanced computational power.

Additionally, the region's tech leaders and start-ups are increasingly adopting supercomputing resources to drive innovation in artificial intelligence, machine learning, and big data analytics. Besides industrial and research applications, the region's governments consider supercomputing a strategic asset for national security and economic competitiveness. This leadership will not only shape the future of HPC but also influence global technological advancements across diverse fields.

By Region

North America

- The U.S.

- Canada

Europe

- Germany

- The U.K.

- France

- Italy

- Russia

- Spain

- Benelux

- Nordic

- Rest of Europe

Asia-Pacific

- China

- Japan

- South Korea

- India

- ANZ

- ASEAN

- Rest of Asia-Pacific

Latin America

- Brazil

- Mexico

- Argentina

- Colombia

- Rest of Latin America

Middle East & Africa

- Saudi Arabia

- UAE

- South Africa

- Israel

- Egypt

- Rest of MEA

Competitive Landscape

The global supercomputer market is highly dynamic and competitive, marked by intense rivalry among key players, regional dominance, and a relentless focus on technological innovation, which is the main source of competitive advantage. Projects such as the US's Frontier and China's Sunway OceanLight are at the forefront of this effort. The integration of AI into HPC systems has opened new avenues for complex workloads such as real-time data analysis and machine learning training.

Energy efficiency is also becoming a major area of research because organizations need to reduce operating costs and their carbon footprint. Advances in cooling technologies and processor architectures are expected to yield more efficient supercomputers that align with global sustainability goals.

Advances in Japan, such as the Fugaku supercomputer, highlight the region's commitment to solving societal challenges in disaster mitigation and healthcare optimization. North America, meanwhile, continues to be the epicenter of innovation, with major contributions from US companies such as NVIDIA, AMD, and IBM, further boosted by substantial government funding for HPC applications in defense, energy, and scientific research. Europe focuses on cooperation through programs such as the EuroHPC Joint Undertaking, which emphasizes energy efficiency and sustainable supercomputing.

Some of the prominent players in the Global Supercomputer Market are:

- Atos SE

- Cray

- Dell Technologies

- Fujitsu

- Hewlett Packard Enterprise

- Honeywell International Inc.

- International Business Machines Corporation

- Lenovo

- NEC Corporation

- Nvidia Corporation

- Other Key Players

Recent Developments

- January 2025: At CES in January 2025, NVIDIA unveiled Project DIGITS, a personal AI supercomputer designed for AI researchers, data scientists, and students. Powered by the new GB10 Grace Blackwell Superchip, developed in collaboration with MediaTek, Project DIGITS is scheduled for release in May 2025, with prices starting at $3,000.

- January 2025: MediaTek announced its collaboration with NVIDIA on the design of the GB10 Grace Blackwell Superchip, which powers NVIDIA's Project DIGITS. This partnership highlights MediaTek's entry into the AI supercomputer market and underscores the industry's trend toward collaborative development of advanced computing technologies.

- December 2024: Italian energy company Eni launched HPC6, a €100 million supercomputer located in Ferrera Erbognone, Italy. Featuring nearly 14,000 AMD graphics processing units, HPC6 ranks among the world's fastest supercomputers.

- December 2024: NVIDIA completed its acquisition of Run:ai, a company specializing in AI workload orchestration. This strategic move aims to enhance NVIDIA's capabilities in managing and optimizing AI workloads across supercomputing platforms, reinforcing its position in the AI supercomputer market.

- November 2024: SoftBank's Japanese telecom unit became the first to receive NVIDIA's latest Blackwell design chips for its supercomputer. This deployment is part of SoftBank's strategy to enhance its AI capabilities and develop a supercomputer to support both 5G and AI services, reflecting the convergence of telecommunications and AI technologies.

Report Details

| Report Characteristics | Details |
| --- | --- |
| Market Size (2025) | USD 13.5 Bn |
| Forecast Value (2034) | USD 20.8 Bn |
| CAGR (2025–2034) | 4.9% |
| The US Market Size (2025) | USD 2.5 Bn |
| Historical Data | 2019 – 2024 |
| Forecast Data | 2026 – 2034 |
| Base Year | 2024 |
| Estimate Year | 2025 |
| Report Coverage | Market Revenue Estimation, Market Dynamics, Competitive Landscape, Growth Factors, etc. |
| Segments Covered | By Operating System (Linux and Unix), By Type (Tightly Connected Cluster Computer, Vector Processing Machines, and Commodity Cluster), By Application (Scientific Research, Weather Forecasting, Defense, Simulations, and Others), and By End User (Commercial Industries, Government Entities, and Research Institutions) |
| Regional Coverage | North America – US, Canada; Europe – Germany, UK, France, Russia, Spain, Italy, Benelux, Nordic, Rest of Europe; Asia-Pacific – China, Japan, South Korea, India, ANZ, ASEAN, Rest of APAC; Latin America – Brazil, Mexico, Argentina, Colombia, Rest of Latin America; Middle East & Africa – Saudi Arabia, UAE, South Africa, Turkey, Egypt, Israel, Rest of MEA |
| Prominent Players | Atos SE, Cray, Dell Technologies, Fujitsu, Hewlett Packard Enterprise, Honeywell International Inc., and Other Key Players |
| Purchase Options | Three licenses are available: Single User License (limited to 1 user), Multi-User License (up to 5 users), and Corporate Use License (unlimited users), with free report customization equivalent to 0, 3, and 5 analyst working days, respectively. |